Top 10 LLM Tracking Tools for AI Visibility (2026)

Updated on Feb 05, 2026

LLM tracking tools are quickly becoming the new visibility layer for modern search.

When users research products inside ChatGPT, Gemini, Claude, or Perplexity, they don’t see ten blue links—they see a single synthesized answer. That answer decides:

- which brands are mentioned,

- which sources are cited,

- and which competitors are positioned as “best”.

LLM tracking tools give you visibility into that layer—what’s working, what’s missing, and where competitors are getting ahead.
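To make the idea concrete, here is a minimal Python sketch of the kind of check a tracking tool might run on a single AI answer: which brands are mentioned, and which source domains are cited. It is an illustration only, not how any tool in this list is implemented. The function name `analyze_answer`, the brand names, and the URLs are all hypothetical, and the sketch assumes the answer text and its citation URLs have already been collected.

```python
import re
from urllib.parse import urlparse


def analyze_answer(answer_text, cited_urls, brands):
    """Report which brands one answer mentions and which domains it cites."""
    # Case-insensitive, whole-word match for each brand name.
    mentioned = [
        brand for brand in brands
        if re.search(rf"\b{re.escape(brand)}\b", answer_text, flags=re.IGNORECASE)
    ]
    # Reduce each cited URL to its domain, dropping a leading "www.".
    domains = sorted(
        {urlparse(url).netloc.lower().removeprefix("www.") for url in cited_urls}
    )
    return {"mentioned": mentioned, "cited_domains": domains}


# Hypothetical answer text and citations, invented for illustration.
answer = "For small teams, Acme CRM and Globex are the best options."
citations = [
    "https://www.example.com/crm-review",
    "https://blog.example.org/top-crms",
]
result = analyze_answer(answer, citations, ["Acme CRM", "Globex", "Initech"])
print(result)
```

In this made-up example, the brand "Initech" is absent from the answer, which is exactly the kind of gap these tools are meant to surface. Real products likely handle aliases, misspellings, and sentiment as well, none of which this sketch attempts.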

Below are the 10 most capable LLM tracking tools in 2026. Each entry covers who the tool is best for, its key features, and its strengths, plus limitations and pricing where available, so you can decide which one fits your team.

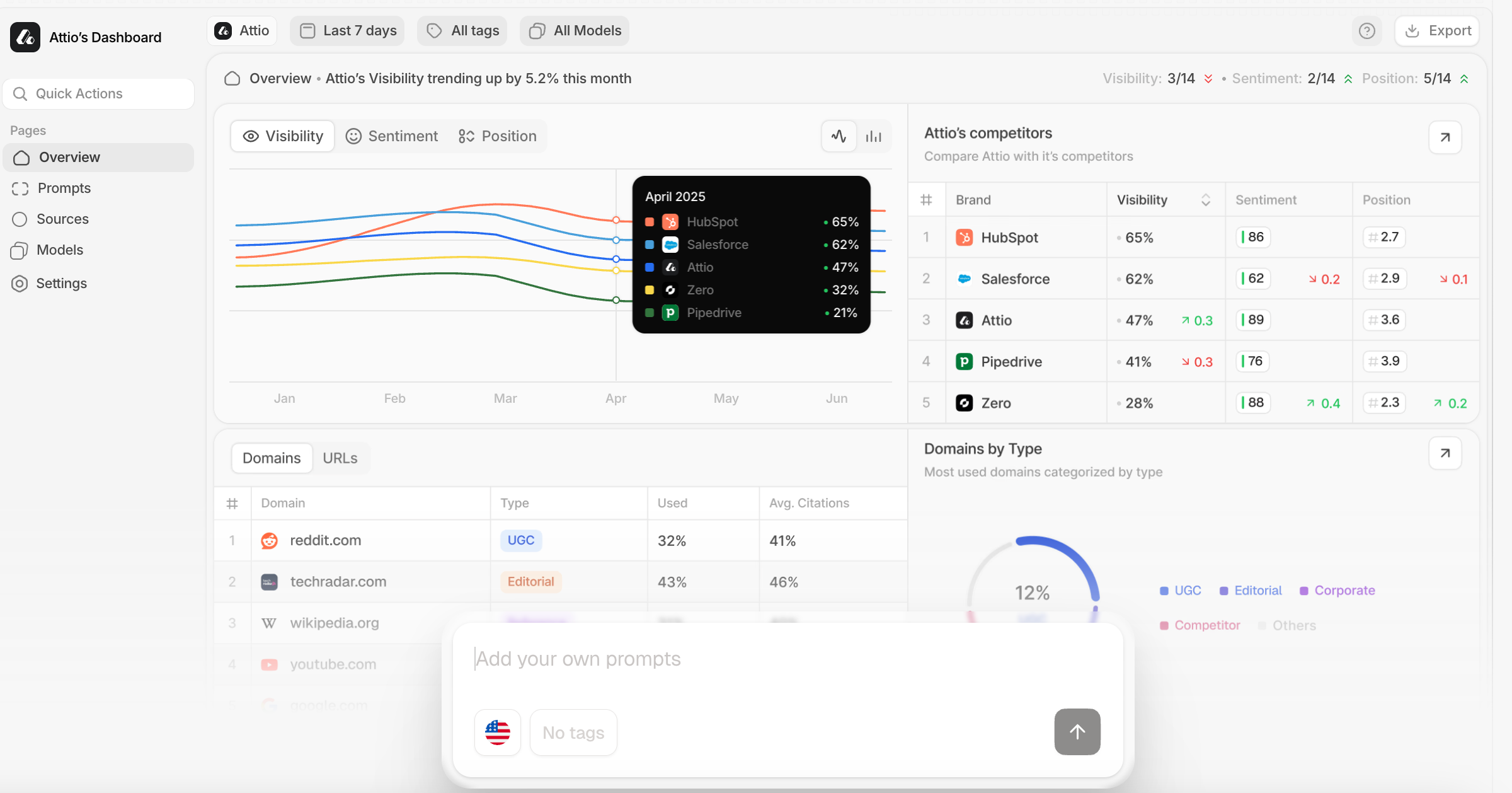

1. Dageno AI — Best Overall GEO & LLM Visibility Platform

Best for:

Marketing, SEO, and content teams that want a systematic, long-term solution to control how AI answer engines understand, select, and cite their content.

About the tool

Dageno AI is a dedicated Generative Engine Optimization (GEO) platform designed to help brands become consistently visible, accurately represented, and preferentially cited across AI answer engines.

Instead of treating LLM visibility as isolated prompts or experiments, Dageno approaches it as a search system problem—similar to how modern SEO evolved beyond keyword rank tracking.

Dageno focuses on how LLMs:

- evaluate authority,

- verify information,

- choose citations,

- and prioritize sources in AI-generated answers.

What Dageno actually does

Dageno monitors brand presence across major AI answer engines (such as ChatGPT, Gemini, Perplexity, and Claude) and connects that visibility data with actionable GEO insights.

It helps teams understand:

- why competitors are cited instead of them,

- which content structures LLMs prefer,

- and what needs to change to improve AI-level visibility—not just surface mentions.

Key features

- AI visibility tracking: Monitor brand mentions, citations, and positioning across major AI answer engines

- Citation analysis: Identify which URLs and sources AI systems trust most

- Competitive benchmarking: Compare visibility share and citation frequency against competitors

- Content understanding signals: Analyze structure, clarity, and authority from an LLM perspective

- GEO strategy insights: Translate tracking data into optimization priorities
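As a rough illustration of what "visibility share" and "citation frequency" can mean in practice, the Python sketch below computes both from a small sample of answers. This is an assumed, simplified definition and not Dageno's actual methodology, which the source does not describe. The function names, brand names, and domains are hypothetical.

```python
from collections import Counter


def visibility_share(answers, brands):
    """Fraction of sampled answers that mention each brand (case-insensitive)."""
    counts = Counter()
    for text in answers:
        lowered = text.lower()
        for brand in brands:
            if brand.lower() in lowered:
                counts[brand] += 1
    total = len(answers)
    return {brand: (counts[brand] / total if total else 0.0) for brand in brands}


def citation_frequency(citation_lists):
    """Number of answers in which each domain is cited at least once."""
    counts = Counter()
    for domains in citation_lists:
        counts.update(set(domains))  # count a domain once per answer
    return counts


# Hypothetical sampled answers and their cited domains, for illustration only.
answers = [
    "Acme and Globex both offer strong reporting.",
    "Globex is the most popular choice.",
    "Many teams start with Globex, then move to Initech.",
    "There is no clear leader in this category.",
]
cited = [
    ["example.com", "example.org"],
    ["example.com", "example.com"],
    ["example.org"],
    [],
]
shares = visibility_share(answers, ["Acme", "Globex", "Initech"])
freq = citation_frequency(cited)
print(shares)
print(freq.most_common())
```

Comparing these numbers for your own brand against competitors, over the same prompt set and time window, is the essence of competitive benchmarking. The substring match here is deliberately naive and would need refinement for brand names that are also common words.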

Pros

- Designed specifically for GEO, not retrofitted from SEO

- Focuses on how AI systems reason, not just on what they output

- Strong competitive and citation-level insight

- Built for long-term AI visibility, not short-term prompt testing

Who should use Dageno AI

If your goal is to systematically influence AI-generated answers at scale, rather than just observe them, Dageno is the strongest foundation.

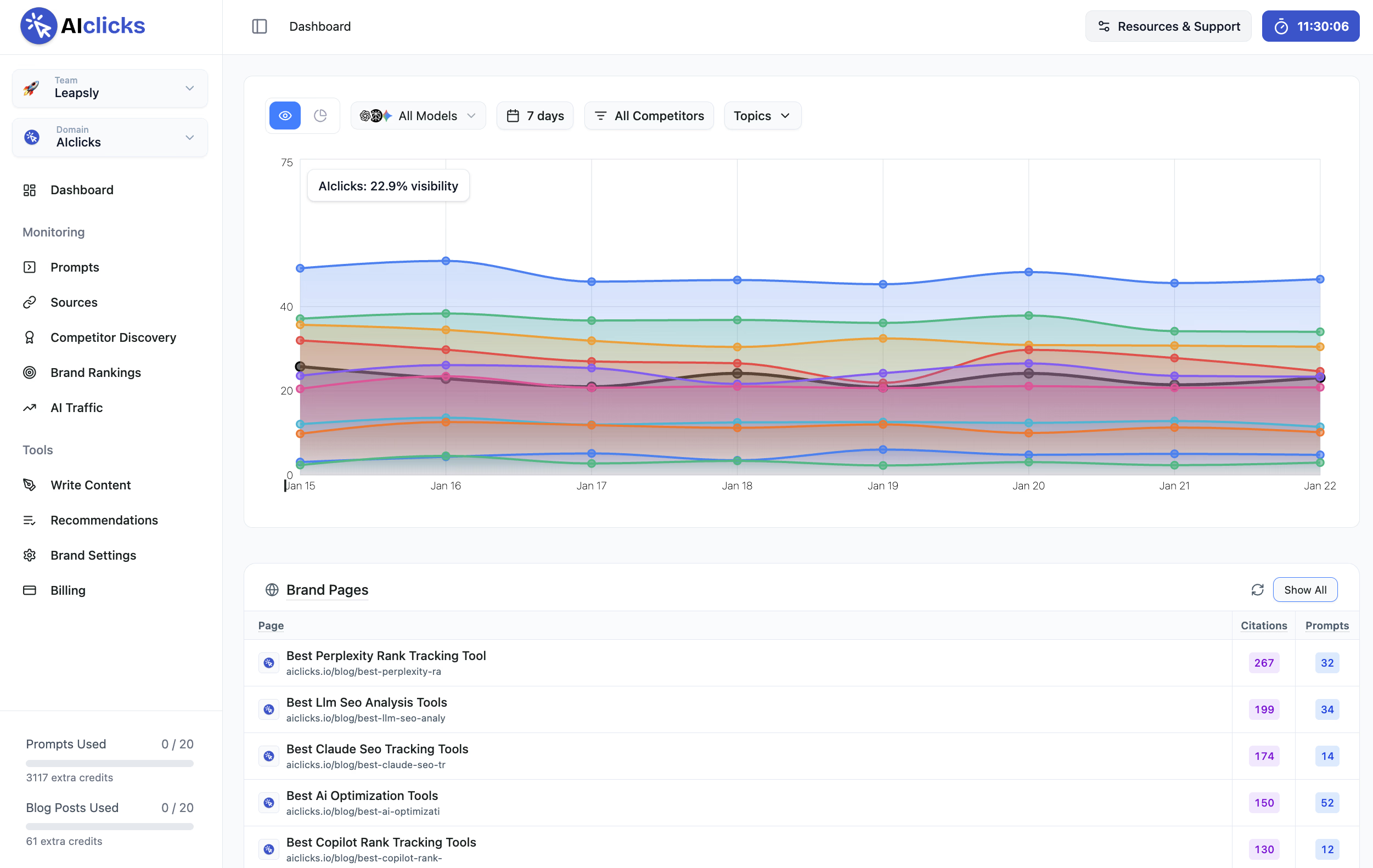

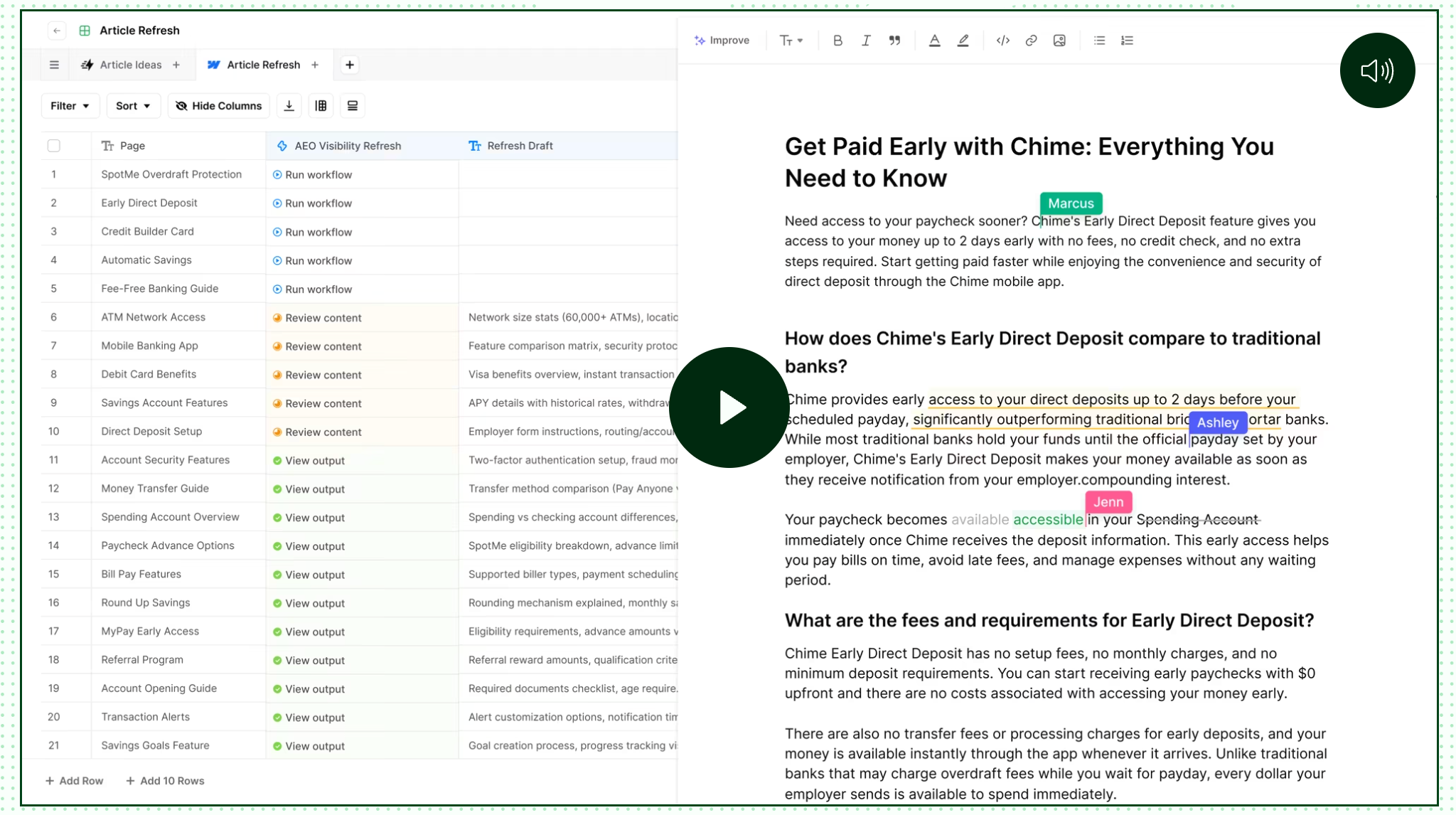

2. AIclicks — Best for Monitoring + Automated Content Execution

Best for:

SEO teams that want LLM visibility tracking combined with AI-generated content to close citation gaps.

About the tool

AIclicks tracks how brands appear across AI answer engines like ChatGPT, Gemini, Google AI Overviews, and Perplexity. It pairs monitoring with execution, using AI agents to generate content where visibility gaps exist.

What it actually does

AIclicks identifies missing or weak visibility areas, then provides:

- competitive audits,

- topic-level recommendations,

- and automated content creation to address missed citations.

Key features

- Brand mention and citation tracking across AI answer engines

- Competitive benchmarking and AEO monitoring

- AI-generated content to target citation gaps

- Weekly visibility and optimization recommendations

Pros

- Combines tracking with execution

- Clear visibility audits

- Helpful for teams moving fast on content

Cons

- Higher prompt volumes require higher-tier plans

Pricing

Starts at $79/month, with higher tiers unlocking more prompts and engines.

Who should use it

Teams that want monitoring plus hands-on content output, without building a GEO process from scratch.

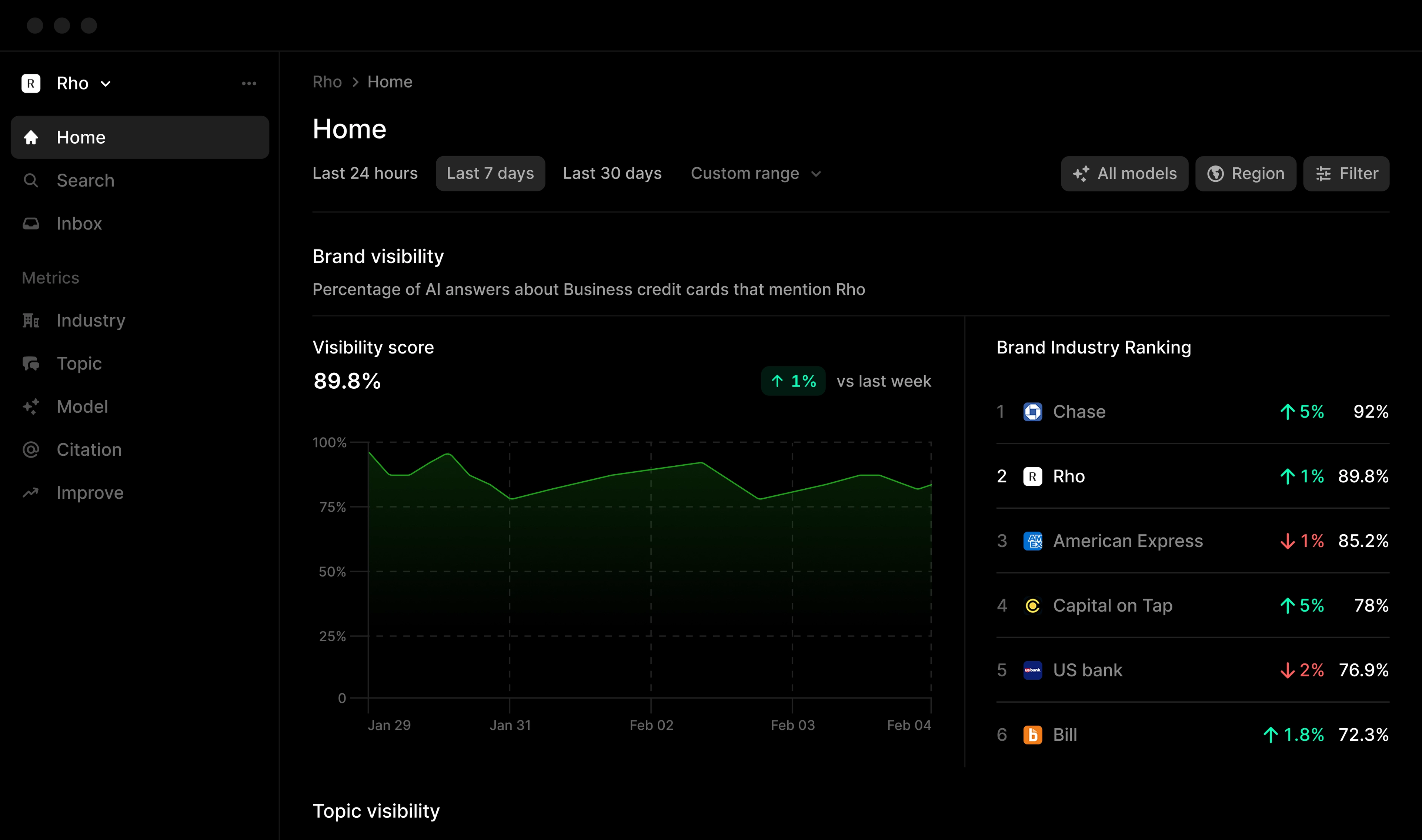

3. Profound — Best for Enterprise-Scale LLM Intelligence

Best for:

Large organizations that need deep visibility analytics across many AI answer engines.

About the tool

Profound is a monitoring-first LLM visibility platform built for enterprise use. It tracks visibility across 10+ AI systems and offers deep insight into real user prompts through its Conversation Explorer.

What it actually does

Profound shows how brands appear across a massive dataset of real AI interactions, helping enterprises understand:

- prompt trends,

- share of voice,

- and brand positioning at scale.

Key features

- Visibility, sentiment, and citation tracking across 10+ engines

- Conversation Explorer with large prompt datasets

- Competitor benchmarking and branded answer analysis

- AI agent and crawler behavior insights

Pros

- Extremely broad engine coverage

- Strong enterprise credibility

- Unique prompt-level intelligence

Cons

- Monitoring-focused; limited optimization workflows

- No free trial

Pricing

Starts at $99/month for limited coverage; enterprise plans are required for full access.

4. Peec AI — Best Lightweight LLM Monitoring Tool

Best for:

SMBs and agencies that want simple, fast AI visibility tracking.

About the tool

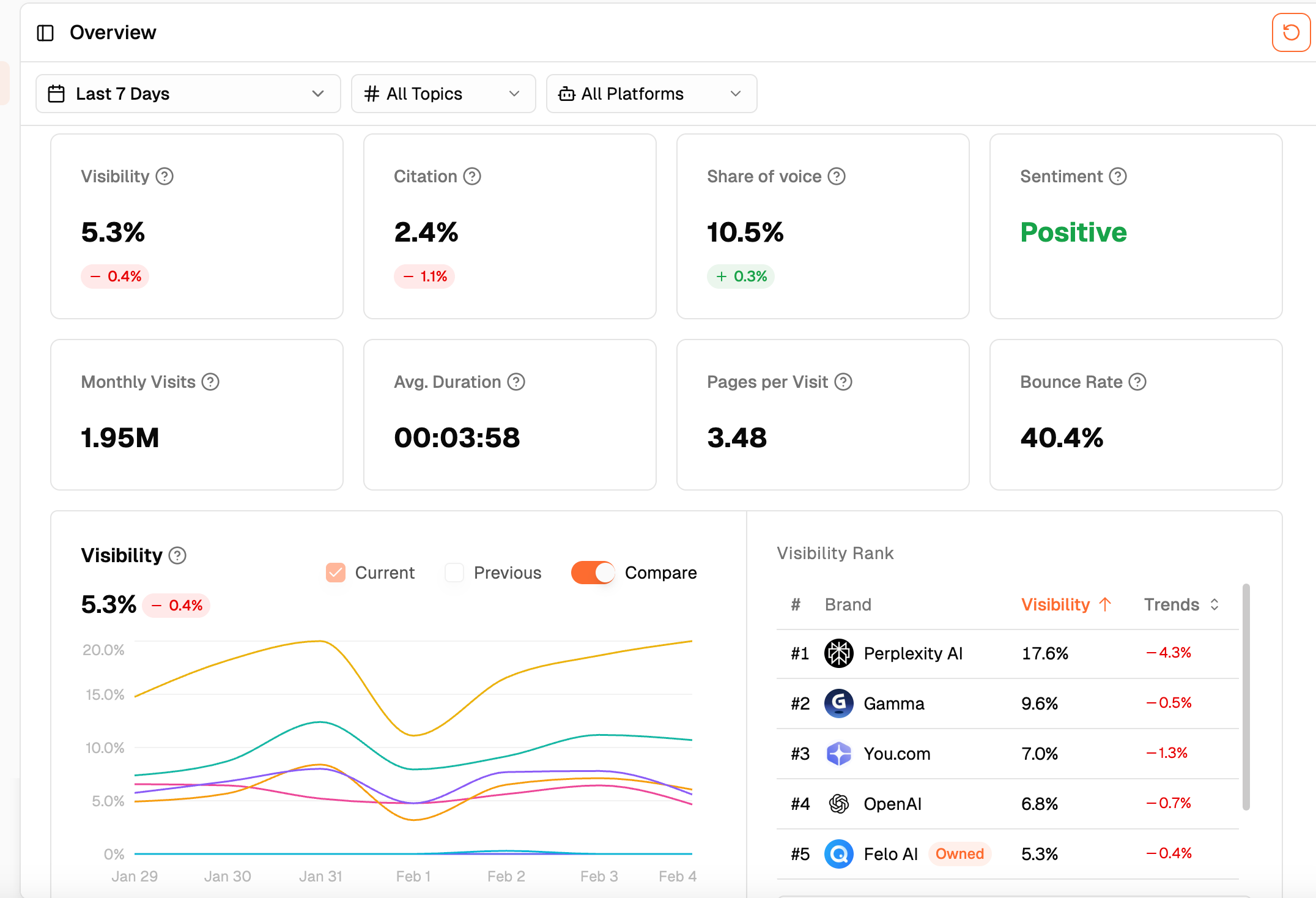

Peec AI focuses on clean UX and fast onboarding, providing monitoring across selected AI answer engines.

What it actually does

Peec tracks mentions, sentiment, citations, and competitors—but stops short of execution or optimization.

Key features

- Brand and competitor visibility tracking

- Citation and source tracing

- Prompt organization

- Multi-language monitoring

Pros

- Easy to use

- Affordable entry pricing

- Agency-friendly

Cons

- Monitoring-only

- Limited engine coverage unless add-ons are purchased

Pricing

Starts at €89/month for limited engine access.

5. Scrunch AI — Best for Persona-Based Visibility & Crawl Insights

Best for:

Teams that want to understand how different user personas experience AI answers.

About the tool

Scrunch AI tracks LLM visibility while also analyzing AI crawler behavior. Its Agent Experience Platform (AXP) helps AI systems better understand site content.

Key features

- Persona-based prompt segmentation

- AI crawler analytics

- Citation frequency analysis

- Domain-level visibility insights

Pros

- Strong crawler intelligence

- Persona-driven insights

- Useful for technical GEO work

Cons

- Monitoring-heavy

- Limited optimization depth

Pricing

Starts around $300/month.

6. AthenaHQ — Best for Multi-Region & Industry-Specific GEO

Best for:

Brands operating across multiple countries or verticals.

About the tool

AthenaHQ emphasizes regional AI visibility, using ML-powered prompt estimation to show how exposure varies by market.

Key features

- Region-based AI visibility tracking

- Industry benchmarking

- Prompt demand estimation

- Competitive impersonation mode

Pros

- Excellent geographic insights

- Strong vertical segmentation

Cons

- Limited execution tools

- Newer platform

7. Otterly AI — Best Budget Entry into LLM Monitoring

Best for:

Startups and solo teams starting with AI visibility.

About the tool

Otterly AI tracks mentions and citations across major AI answer engines with minimal setup.

Key features

- Automated prompt runs

- Citation detection

- Simple dashboards

Pros

- Very affordable

- Easy to adopt

Cons

- Monitoring-only

- Limited depth for advanced GEO

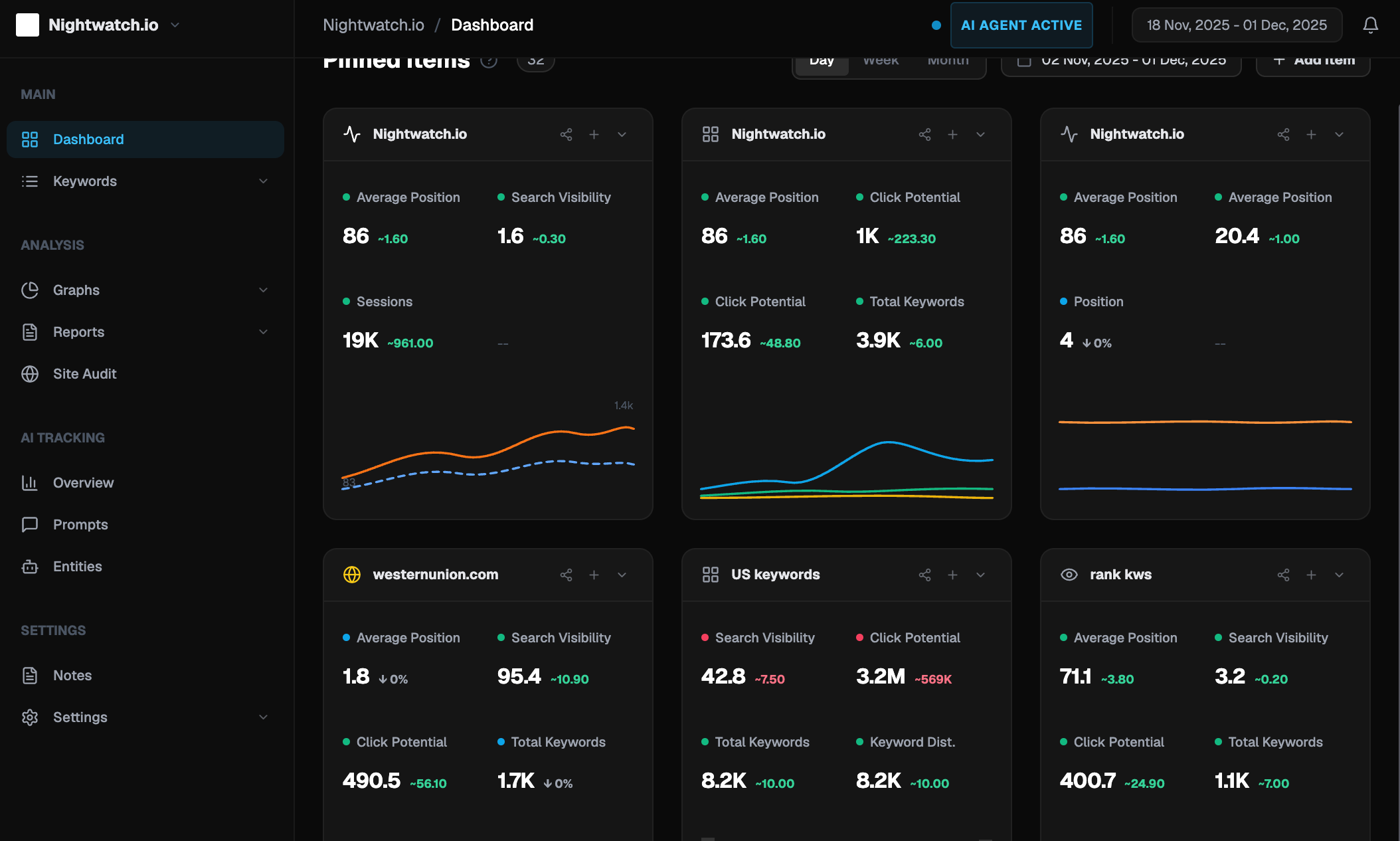

8. Nightwatch — Best Hybrid SEO + LLM Tracking

Best for:

SEO teams bridging traditional rankings and AI-generated answers.

Key features

- Keyword rank tracking

- SERP feature monitoring

- AI answer visibility insights

Pros

- Strong SEO foundation

- Unified reporting

Cons

- GEO optimization depth is limited

9. Goodie — Best AEO-Focused Platform

Best for:

Teams building Answer Engine Optimization–first workflows.

Key features

- LLM visibility tracking

- AI crawler analytics

- AI-optimized content creation

Pros

- Strong execution focus

- Designed for AEO use cases

Cons

- No public pricing

- More complex setup

10. AirOps — Best for LLM Visibility + Content Ops Automation

Best for:

Large teams scaling AI-driven content workflows.

Key features

- Visibility signals tied to content workflows

- CMS integrations

- AI-powered publishing pipelines

Pros

- Powerful automation

- Strong for agencies and enterprises

Cons

- Steeper learning curve

- Overkill for simple monitoring

Get the #1 LLM Visibility Tracking Tool: Dageno AI

As AI answer engines increasingly replace traditional search results, visibility is no longer about rankings alone—it’s about being selected, cited, and trusted inside AI-generated answers.

Dageno AI is built specifically for this shift. Instead of treating LLM visibility as isolated prompts or surface-level mentions, Dageno helps brands systematically understand how AI answer engines interpret their content, compare competitors, and decide which sources to cite.

For teams serious about Generative Engine Optimization (GEO), Dageno provides a structured way to track AI visibility, analyze citation patterns, benchmark competitors, and translate insights into long-term visibility gains across AI-driven search experiences.

Frequently Asked Questions about LLM Tracking Tools

What are LLM tracking tools?

LLM tracking tools monitor how large language model–powered answer engines mention, describe, and cite brands in AI-generated responses. They help teams understand visibility, accuracy, and competitive positioning inside AI-driven search results rather than traditional SERPs.

Why is LLM visibility tracking important for SEO and GEO?

Because users increasingly rely on AI answers for research and decision-making, brands that are not visible or accurately represented in these answers lose influence early in the funnel. LLM tracking tools reveal gaps that traditional SEO tools cannot detect.

How are LLM tracking tools different from traditional SEO tools?

Traditional SEO tools focus on keyword rankings and traffic from search engines. LLM tracking tools focus on mentions, citations, sentiment, and source selection inside AI-generated answers, which follow different ranking and trust mechanisms.

Which teams benefit most from LLM tracking tools?

Content teams, SEO teams, product marketers, and B2B brands benefit most—especially those operating in competitive markets where AI answers strongly influence product comparison, brand trust, and buying decisions.

Why choose Dageno AI over other LLM tracking tools?

Dageno AI is designed for systematic GEO, not just monitoring. It goes beyond surface visibility by helping teams understand why AI systems select certain sources and how to improve long-term citation and trust across AI answer engines.

About the Author

Updated by

Ye Faye

Ye Faye is an SEO and AI growth executive with extensive experience spanning leading SEO service providers and high-growth AI companies, bringing a rare blend of search intelligence and AI product expertise. As a former Marketing Operations Director, he has led cross-functional, data-driven initiatives that improve go-to-market execution, accelerate scalable growth, and elevate marketing effectiveness. He focuses on Generative Engine Optimization (GEO), helping organizations adapt their content and visibility strategies for generative search and AI-driven discovery, and strengthening authoritative presence across platforms such as ChatGPT and Perplexity.